
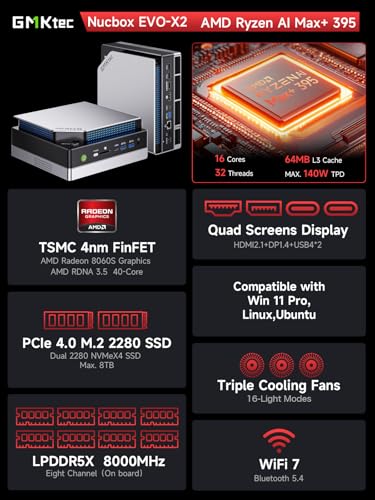

GMKtec EVO-X2 AI Mini PC Ryzen AI Max+ 395 (up to 5.1GHz) Mini Gaming Computers, 128GB LPDDR5X 8000MHz (16GB*8) 2TB PCIe 4.0 SSD, Quad Screen 8K…

Original price: $2.599,99. Current price: $2.199,99.

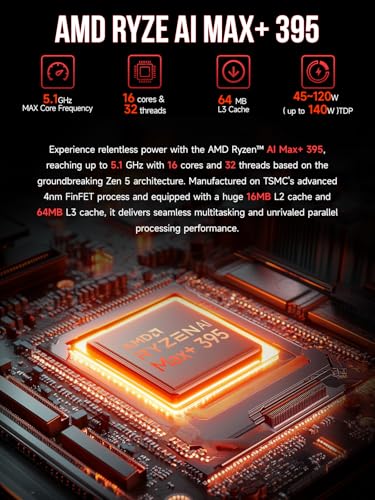

- EVOLUTION RYZEN AI MAX+ 395 MINI PC – GMKtec EVO-X2 is the next evolution in the Ryzen Strix Halo series of AI mini PCs. Thanks to AMD Simultaneous Multithreading (SMT), the thread count is effectively double the core count, at 32 threads. The Ryzen AI Max+ 395 has 64 MB of L3 cache and can boost up to 5.1 GHz, depending on the workload. The Ryzen AI Max+ 395 is currently rated as the “most powerful x86 APU” on the market for AI computing.

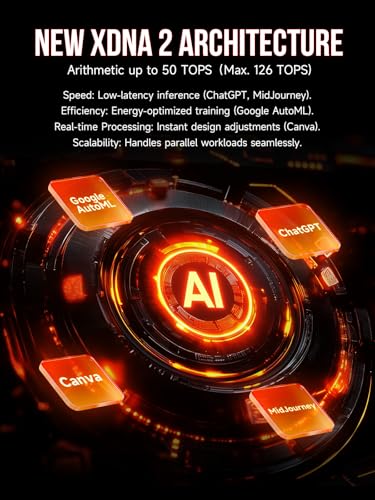

- AI NPU with XDNA 2 ARCHITECTURE – Powered by 16 “Zen 5” CPU cores, a 50+ peak AI TOPS XDNA 2 NPU, and a large integrated GPU driven by 40 AMD RDNA 3.5 CUs, the Ryzen AI Max+ 395 delivers a significant performance boost over the competition. It excels in consumer AI workloads such as LM Studio, a llama.cpp-powered application. Shaping up to be the must-have app for client LLM workloads, LM Studio lets users run the latest language models locally without any technical knowledge required and unleash their creativity and productivity.

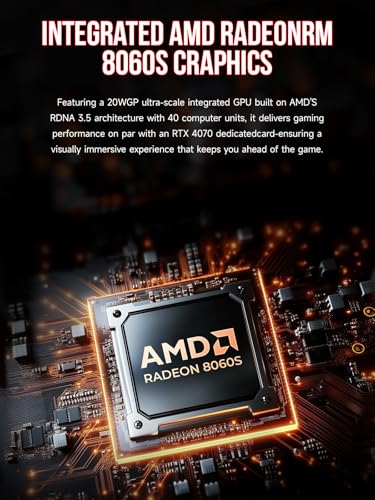

- AMD RADEON 8060S iGPU GAMING PC – The AMD Radeon 8060S offers all 40 CUs with a graphics clock of up to 2.9 GHz and uses the new RDNA 3.5 architecture. The iGPU is positioned between an RTX 4060 and an RTX 4070 laptop GPU and therefore enables gaming in FHD at maximum detail in most demanding games. The 8060S can also utilize the full 128GB pool, which is well suited to running LLMs such as Deepseek 70B Q8, which runs comfortably on this machine.

- EIGHT CHANNEL LPDDR5X – LPDDR5X is a groundbreaking small-form-factor memory installed on-board. With speeds up to 8000 MT/s, it runs 1.5x faster than DDR5 SODIMMs, with 90% better performance in video conferencing and photo editing, 30% better performance in productivity apps, and 12% better performance in digital content workloads.

- QUAD SCREEN 8K DISPLAY SUPPORT – The EVO-X2 AI Mini PC supports 4-screen 4K/8K output via HDMI 2.1 (8K@60Hz), DisplayPort 1.4 (4K@60Hz), and dual 40Gbps USB 4 ports (supporting PD 3.0, DP 1.4, and data). Ideal for gaming, video editing, and multitasking, it provides expansive and crisp multi-display support.

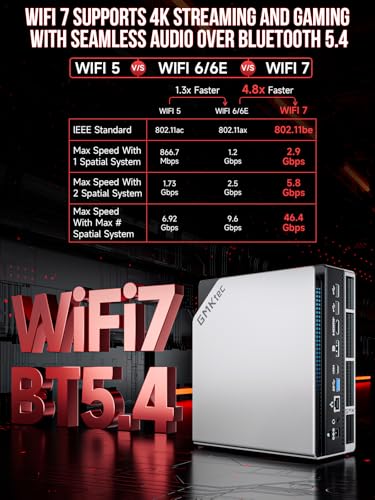

- FAST 2.5GBE + WIFI 7 + BT 5.4 – The 2.5GbE Ethernet LAN port supports applications such as firewalls, multichannel aggregation, soft routing, and file storage servers. Wi-Fi 7, also known as 802.11be, promises up to 46Gbps theoretical throughput, making it 4.8x faster than Wi-Fi 6 and 13x faster than Wi-Fi 5, while maintaining compatibility with older Wi-Fi versions at lower speeds. Built-in Bluetooth 5.4 provides a more stable and efficient connection to multiple wireless devices such as projectors, printers, monitors, and speakers.

- TRIPLE COOLING FANS WITH LIGHTING – Dual turbo CPU fans + a massive DDR5/SSD cooling fan deliver silent, ultra-efficient cooling (just 35dB in Quiet Mode!), while the 3 advanced heatpipes and 360° airflow keep your Ryzen AI Max+ 395 Mini PC frosty under heavy loads. Plus, 13 dazzling RGB lighting modes let you personalize your rig’s vibe with the touch of a button! Cooler. Quieter. Brighter.

- THREE PERFORMANCE MODES – Switch seamlessly between Quiet (54W), Balanced (85W), and Performance Mode (140W) with just a tap of the dedicated power button for ultimate convenience—no BIOS hassle! See instant on-screen symbol confirmation when you shift modes. Unlock up to 96GB VRAM (via AMD software) for next-level AI and gaming, plus enjoy Auto Power On & Wake-on-LAN features. Power redefined.

- SD 4.0 CARD READER – The SD/TF 4.0 card reader provides steady and efficient data transmission, supporting SD/TF 4.0 and UHS-II cards, for faster photo and video transfers.

- GMKTEC WARRANTY – GMKtec offers a 1-year limited warranty for each mini PC, starting from the date of purchase. All defects due to design and workmanship are covered. With a professional after-sales team always ready to attend to your needs, you can simply relax and enjoy your mini PC.

| Specification | Value |
|---|---|
| Standing screen display size | 75 |
| Screen Resolution | 3840 x 2160 |
| Max Screen Resolution | 7680 x 4320 |
| Processor | 5.1 GHz ryzen_ai_max |
| RAM | LPDDR5X 8000 MT/s |
| Memory Speed | 8000 MHz |
| Hard Drive | 2 TB PCIe 4.0 M.2 2280 SSD; dual slots, max. 4 TB per slot |
| Graphics Coprocessor | AMD Radeon 8060S Graphics, 40 cores, RDNA 3.5 |
| Chipset Brand | AMD |
| Card Description | Integrated |
| Graphics Card Ram Size | 128 GB |
| Wireless Type | 2.4 GHz Radio Frequency, 5 GHz Radio Frequency, 5.8 GHz Radio Frequency, 802.11ax, Bluetooth |
| Number of USB 2.0 Ports | 2 |
| Number of USB 3.0 Ports | 3 |
| Brand | |
| Series | EVO-X2 |
| Item model number | EVO-X2 |
| Operating System | Windows 11 Pro |
| Item Weight | 7.24 pounds |
| Package Dimensions | 15.07 x 9.92 x 3.7 inches |
| Color | |
| Processor Brand | AMD |
| Number of Processors | 16 |
| Computer Memory Type | DDR5 RAM |
| Flash Memory Size | 64 MB |
| Hard Drive Interface | PCIE x 16 |

8 reviews for GMKtec EVO-X2 AI Mini PC Ryzen AI Max+ 395 (up to 5.1GHz) Mini Gaming Computers, 128GB LPDDR5X 8000MHz (16GB*8) 2TB PCIe 4.0 SSD, Quad Screen 8K…


Mark –

For me, at least, it arrived on time and worked great, without a messed-up fan. It seems to run LLMs quite well; I can get about 30 tok/sec out of gpt-oss 120. It runs games quite well too, although Oblivion Remastered showed me what the reviewers were complaining about with optimization. It got a little warm and plasticky-smelling running that game, but throttled at 70C, as it should. My only complaint is that they put the screws on the bottom under the feet, so I hope you have a hair dryer handy if you want to add an extra SSD.

Eric D. Macarthur –

It took almost 6 weeks after ordering for one to arrive, and when it did, it was dead on arrival. GMKtec support responded within a day, but nothing they suggested worked. So, all the waiting and troubleshooting just to ship it back. What fun. Not recommended.

Donald Hawthorne –

I really don’t like the design. So many other AI Max+ 395 computers have much better designs. It gets the job done. I wish I had gone with the Framework PC, but they have a long lead time, I guess because the demand is so high. The fans on this will scream, sometimes out of nowhere. I could understand it under heavy load, but sometimes the fans spin up while just surfing the web or watching a YouTube video, and these blower fans are so loud.

Mark D. –

It works fine with Vulkan and CPU. Any LLM using 100% GPU on ROCm will output gibberish and possibly crash the machine, so stick to Vulkan should you have any problems. Adding a second SSD is very easy; the screws to open the case are located under the rubber pads.

Edward Lee –

The largest AI model I am able to load into the LM Studio software on my GMKtec EVO-X2 outputs about 8 tokens/second:

Qwen3-235B-A22B-Instruct-2507-gguf-q2ks-mixed-AutoRound-inc

This mixture-of-experts AI model is also known as:

Qwen3-235B-A22B-Instruct-2507-128x10B-Q2_K_S-00001-of-00002.gguf

This is a 235 Billion parameter model with Q2_K_S automatic Intel quantization. I am able to load this model with 96GB of memory dedicated to VRAM and the following model config in LM Studio 0.3.20:

GPU Offload: 80 / 94

Offload KV Cache to GPU Memory: NO (slider to the left)

Keep Model in Memory: NO (slider to the left)

All other model config settings are at their default values, including the attempt to use mmap option, which seems to be necessary to load this particular model.

In the magnifying lens / Runtime window, I had to select:

GGUF: ROCm llama.cpp (Windows) v1.42

ROCm allows more layers to be offloaded to the GPU and allows this large model to be faster than the Llama 3.3 70B parameter model, which outputs about 5 tokens/second with Vulkan and with the test prompt, “Why is the sky blue?” The smaller models seem to run faster with Vulkan.

When you reboot the computer, you can repeatedly press the Esc key to enter the BIOS configuration and set 96GB for the graphics memory and activate Performance mode.

I learned that it is possible to activate the High Performance mode in Windows 11 by right clicking in the Windows menu and selecting:

Terminal (Admin)

A command prompt will then open. You can then enter:

powercfg -setactive SCHEME_MIN

I then went into the Control Panel / Power Options to change the advanced power settings for the “High performance” plan which was now unhidden. I set the “Turn off hard disk after” option to Never. I set the “Sleep after” option to Never. I set the “Minimum processor state” to 100%.

The output speed of Qwen3 235B increased to 8.7 to 8.8 tokens/second for the test question, “Why is the sky blue?” This output rate is faster than the rate at which I can read when I think about what the AI model is writing. Smaller AI models might output answers faster, but the answers tend to be wrong with greater frequency than larger AI models. Wrong answers delivered fast are useless to me.
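A tokens/second figure like the ones quoted in this review is just the number of generated tokens divided by wall-clock time. The Python sketch below shows one way such a figure could be measured and compared between two configurations. The `generate` callable is a hypothetical stand-in for whatever produces the tokens; it is an assumption for illustration and not an LM Studio or llama.cpp API.

```python
import time
from typing import Callable, Sequence


def tokens_per_second(
    generate: Callable[[str], Sequence[str]],
    prompt: str,
    clock: Callable[[], float] = time.perf_counter,
) -> float:
    """Time one call to generate(prompt) and return tokens per second.

    `generate` is a hypothetical stand-in that returns the generated tokens;
    it is not part of LM Studio or llama.cpp.
    """
    start = clock()
    tokens = generate(prompt)
    elapsed = clock() - start
    if elapsed <= 0:
        raise ValueError("elapsed time must be positive")
    return len(tokens) / elapsed


def speedup_percent(before: float, after: float) -> float:
    """Relative change between two throughput figures, in percent."""
    return (after - before) / before * 100.0
```

With the figures above, going from about 8 to about 8.7 tokens/second works out to a gain of roughly 9%. Using the same prompt for every run, as this review does, keeps the comparison between settings meaningful.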

Qwen3 235B sometimes outputs gibberish, such as, “GGGGG…” I don’t know if this is due to a memory shortage, a context window that is too small, a result of the quantization, or something else. I don’t seem to have enough memory left to increase the context window size from the default 4,096. Keeping prompts short on one line seems to help prevent this issue.

08/04/2025 Update: I was able to increase the context window size by setting the options:

Context Length: 262144

Evaluation Batch Size: 256

Flash attention: YES (slider to the right)

The default Evaluation Batch Size of 512 seems to cause an eventual crash, but 256 seems stable. The speed of Qwen3 235B gradually slows down as the context window fills up. After about 27,000 tokens are in the context window, the output speed drops to about 1 token/second, so 27,000 is about the practical limit for the context length unless you are patient.

I deleted OneDrive and the Recall feature. In the Edge browser, I disabled the Settings / System and performance / System / “Startup boost” option to prevent Edge from staying resident in memory all the time. I also disabled the option for “Continue running background extensions and apps when Edge is closed”. I ran the msconfig program to disable the Print Spooler and some other print-related process which I will never use on this computer.

8/5/2025 Update: I was able to load the new AI model:

openai/gpt-oss-120b

The GGUF files come in 2 parts:

gpt-oss-120b-MXFP4-00001-of-00002.gguf

gpt-oss-120b-MXFP4-00002-of-00002.gguf

This is a 120 Billion parameter GPT Open Source Software version from OpenAI with MXFP4 quantization. The size listed in LM Studio is 63.39GB.

In the BIOS, I had to select 64GB dedicated to graphics memory. I am still able to load Qwen3 235B, but the output speed drops to about 7 tokens/second for the test question, “Why is the sky blue?” For Qwen3 235B, it seems better to have 96GB dedicated to graphics memory. The output speed of openai/gpt-oss-120b is about 10 tokens/second for the same question.
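The trade-off between graphics memory and system memory described here is simple arithmetic, sketched below in Python under stated assumptions. The 128GB total and the 63.39GB model size come from this review; the function name and the returned keys are made up for illustration. The check ignores the KV cache, runtime overhead, and GB-versus-GiB differences, so it is only a first-pass estimate and not a guarantee that a model will load.

```python
def memory_budget(vram_gb: float, model_gb: float, total_gb: float = 128.0) -> dict:
    """Split total memory into VRAM and system RAM and report model headroom.

    Ignores KV cache, runtime overhead, and GB-vs-GiB differences, so
    `fits_in_vram` is only a first-pass check, not a guarantee of loading.
    """
    if not 0 <= vram_gb <= total_gb:
        raise ValueError("VRAM allocation must be between 0 and total memory")
    return {
        "system_ram_gb": total_gb - vram_gb,
        "vram_headroom_gb": round(vram_gb - model_gb, 2),
        "fits_in_vram": model_gb <= vram_gb,
    }


# gpt-oss-120b (63.39GB as listed in LM Studio) under two BIOS allocations
for vram in (64, 96):
    print(vram, memory_budget(vram, 63.39))
```

By this estimate, a 64GB allocation leaves well under 1GB of headroom in graphics memory for the 63.39GB model, while a 96GB allocation leaves about 32GB of headroom but only 32GB of system RAM.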

The following is the openai/gpt-oss-120b model config in LM Studio 0.3.21:

Context Length: 30000

GPU Offload: 36 / 36

Evaluation Batch Size: 4

Offload KV Cache to GPU Memory: NO (slider to the left)

Keep Model in Memory: NO (slider to the left)

Try mmap: NO (slider to the left)

All other model config settings are at their default values. Attempting to offload the KV cache to GPU memory results in a failure to load. Attempting to activate Flash Attention results in a failure to load.

Setting the mmap option to NO seems to result in much more RAM being available once the model is loaded, dropping from about 57GB used to less than 5GB used according to the number in the lower right corner of LM Studio.

In the Magnifying lens (Discover) / Runtime window, I had to select:

GGUF: Vulkan llama.cpp (Windows) v1.44.0

Attempting to use the ROCm llama.cpp v1.43.1 causes the openai/gpt-oss-120b model not to load. So, if I switch between loading Qwen3 235B and openai/gpt-oss-120b, I must choose the version of llama.cpp that works with each model.

8/7/2025 Update: I was occasionally getting an error in GPT after it had finished outputting a response. The error message was something like: “Unexpected end of grammar stack after receiving input: G”. Repeated outputs of the letter G, such as, “GGGGG…”, seem to be related to the Evaluation Batch Size, which seems to be stable at a value of 4 for the GPT model. I used a variation of the following prompt and kept dividing the Evaluation Batch Size by 2 until I no longer got the error message:

Please write a story about people who wake up from suspended animation in another star system. The study of Arctic and Antarctic fish allowed scientists to develop an artificial protein that inhibits ice formation. The people left Earth due to warfare and did not have time to prepare. The ship’s AI still thinks it is near Earth in the past, because that is when the AI was last updated while its atomic clock still functioned. The atomic clock was hit with shrapnel in orbit, caused by anti-satellite weapons, that was recorded as a micro-meteorite strike. Automated systems sealed the hole in the hull, but the clock remained non-functional. Any watches worn by the people in cryochambers caused frost injuries to the skin where bare metal contacted skin. The watches were broken by the cold in the cryochambers. Any watches that were stored outside the cryochambers either have depleted batteries or have not been regularly wound by movement in the case of automatic watches. Analog watch hands are halted at various time indications. An automated routine in the cryochambers wakes the crew up independently of the main AI system. Any views of the outside are through cameras and sensors which are controlled by the AI. A crew member tells the AI system to start up the main systems, but the AI system refuses to do so, because the AI refuses to accept that time has passed and thinks the crew is discussing a hypothetical scenario. The crew must either convince the AI that time has passed or somehow bypass the AI’s control over the ship to survive. Bypassing the AI will result in loss of navigation and dynamic fusion containment. The AI shows the crew a picture of the Earth before they reached escape velocity as if the Earth were outside in the present. The image causes one crew member to believe that they are still on Earth. The crew member’s mental illness had been well treated on Earth before the war made prescription refills impossible. 
The crew attempt to improvise a new clock for the AI, but the AI recognizes the inaccuracy of the clock and ignores the clock. The crew attempt to manually restart the fusion reactor but abandon the attempt when they almost lose containment. All hope seems to be lost. However, the improvised clock allows the AI’s predictions, which are analogous to dreams or hallucinations in humans, to move forward in time. Previously, the AI’s predictions had all been overlaid on top of each other at the same instant in time. The AI decides on its own to search for a pulsar as a time reference.

8/10/2025. I feel the need to respond to Bill Bohn’s review of a computer which he apparently does not own. There is nothing misleading in my review. The numbers are what they are. If you actually had a GMKtec EVO-X2, then you could reproduce my numbers. Moonshot Kimi-K2 is simply wrong when it writes, “The reviewer is running a GMKtec EVO-X2 mini-PC that houses a Ryzen 9 8945HS (8 Zen 4 cores + Radeon 780M iGPU) plus 96 GB of system DDR5 and BIOS UMA set to 96 GB.” Moonshot Kimi-K2 is incorrectly identifying the processor, the graphics unit, and the amount of memory the GMKtec EVO-X2 computer has. The processor is a Ryzen AI Max+ 395, which has 16 processor cores, not 8. The graphics processor is an integrated AMD Radeon 8060S, not a 780M. The GMKtec EVO-X2 has 128GB of total system memory, not 96GB. A maximum of 96GB of the 128GB total system memory can be allocated for VRAM. Why are you reviewing a computer that you apparently do not own and apparently know nothing about? Do not blindly accept whatever information an AI system gives to you.

8/11/2025. I have changed the previous Evaluation Batch Size from 16 to 4 for the gpt-oss-120b model. This seems to be the most stable setting so far.

8/17/2025. The gpt-oss-120b model from OpenAI now outputs 36 to 40 tokens/second for the prompt, “Why is the sky blue?” This speed increase was made possible by selecting the new ROCm llama.cpp v1.46.0 and choosing 96GB of memory to be allocated to video memory in the BIOS. Thanks to whoever made this dramatic speed increase possible. What’s funny is that gpt-oss-120b tells me it is impossible for consumer-level computer hardware to achieve this speed.

In the gpt-oss-120b settings, I set:

Context Length: 63000

GPU Offload: 36/36

Evaluation Batch Size: 256

Offload KV Cache to GPU Memory: YES (slider to the right)

Flash Attention: YES (slider to the right)

In the magnifying lens / Runtime setting, I set:

GGUF: ROCm llama.cpp (Windows) v1.46.0

All other parameters are at their default settings for gpt-oss-120b.

Qwen3 235B now outputs about 9 to 10 tokens/second. The new version of ROCm llama.cpp v1.46.0 seems to allow 81 layers to be offloaded to the GPU instead of 80 layers. My model settings for Qwen3 235B are now:

Context Length: 30000

GPU Offload: 81/94

Evaluation Batch Size: 128

Offload KV Cache to GPU Memory: NO (slider to the left)

Keep Model in Memory: NO (slider to the left)

Flash Attention: YES (slider to the right)

In the magnifying lens / Runtime setting, I set:

GGUF: ROCm llama.cpp (Windows) v1.46.0

All other parameters are at their default settings for Qwen3 235B.

Currently, there seems to be no substitute for experimenting with the various parameters to get a stable configuration in LM Studio. The parameters that AI models recommend do not work. I recommend dividing the Evaluation Batch Size by 2 if you get gibberish from one of these large AI models or if the AI model crashes.
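The halving procedure recommended above can be written down as a short search. This is a minimal Python sketch, assuming a hypothetical `is_stable` check that the user supplies (for example, loading the model at that batch size, running the test prompt, and checking for gibberish or a crash). Nothing here calls LM Studio; the function only encodes the "divide by 2 until it works" loop.

```python
from typing import Callable, Optional


def find_stable_batch_size(
    start: int,
    is_stable: Callable[[int], bool],
    minimum: int = 1,
) -> Optional[int]:
    """Halve the batch size until is_stable() accepts it.

    `is_stable` is a hypothetical, user-supplied check (e.g. run a test
    prompt and look for gibberish or a crash). Returns the first size
    that passes, or None if no size down to `minimum` passes.
    """
    size = start
    while size >= minimum:
        if is_stable(size):
            return size
        size //= 2  # 512 -> 256 -> 128 -> ...
    return None


# Stand-in check: pretend only sizes of 4 or less are stable.
print(find_stable_batch_size(512, lambda size: size <= 4))
```

Starting from the default of 512 with this stand-in check, the search tries 512, 256, 128, 64, 32, 16, and 8 before settling on 4. Because each trial in practice means reloading a very large model, the halving schedule keeps the number of attempts small.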

8/26/2025. After upgrading to AMD Adrenalin 25.8.1, the gpt-oss-120b AI model stopped working with ROCm llama.cpp v1.46.0 in LM Studio 0.3.23. I am still able to use Vulkan llama.cpp v1.46.0 but at a reduced output speed of about 33 tokens/second for the test prompt, “Why is the sky blue?” You might want to set a System Restore point before upgrading the AMD Adrenalin software so that you can go back to a faster configuration if the upgrade degrades inference speed.

9/10/2025. I installed a second 4TB NVMe drive made by Western Digital after removing the rubber feet to reveal the screws. I followed the instructions of gpt-oss-120b for running a free Windows program named Rufus to make a bootable USB drive with a Debian installation *.iso file extracted to the USB drive, and then booted from the drive. Everything was going well until I had to enter my wireless network password. After repeatedly entering the correct password, the installation would not advance beyond the DHCP configuration. Updating the computer’s BIOS did not help. I discovered I had to select “None” as the driver for my wired network adapter. That option comes up somewhere in the non-graphical installation process. The wireless network Debian installation then worked.

The gpt-oss-120b AI model outputs at 47 tokens/second in LM Studio 0.3.25 running in Debian Linux with the Gnome graphical interface for the prompt, “Why is the sky blue?”

Gnome has an option for High Performance mode, similar to Windows, which I activated.

I set the magnifying glass / Hardware / Memory Limit value to 110.

The Runtime / GGUF setting is Vulkan llama.cpp (Linux) v1.50.2. For some reason, the ROCm llama.cpp v1.50.2 program does not work in Debian Linux with the EVO-X2 computer at present.

The gpt-oss-120b model settings in LM Studio in Debian Linux are:

Context Length: 30000

GPU Offload: 36/36

Evaluation Batch Size: 127

Keep Model in Memory: NO (slider to the left)

Try mmap(): NO (slider to the left)

Flash Attention: YES (slider to the right)

All other gpt-oss-120b model parameters are at their default settings.

10/1/2025. LM Studio 0.3.27 running in Debian “Trixie” Linux automatically installed ROCm llama.cpp (Linux) v1.52.0 and selected that as the engine for GGUF even though ROCm is currently not supported in the Debian “Trixie” edition. The Vulkan llama.cpp (Linux) v1.52.0 apparently uses more memory than v1.50.2 and causes the output speed of gpt-oss-120b to plummet to around 2 tokens/second. This is a step in the wrong direction. Luckily, I could scroll down in the All tab of the Runtime window, click on the “…” next to Vulkan llama.cpp (Linux), select “Manage Versions”, and delete Vulkan llama.cpp v1.52.0 to make v1.50.2 the default. I also had to uncheck the “Auto-update selected Runtime Extension Packs” option.

jack –

Performance is excellent. I’m scoring 12k CPU and GPU on Timespy, all while drawing only 150W. Stable Diffusion performance is amazing per watt but still nothing compared to my 14900K/4090, not that it is expected to be, though it seems to be roughly 3x as power efficient, which is definitely impressive. I left the RAM set at 64GB allotted for the GPU (VRAM), and so far, even with Stable Diffusion, it has not used more than 28GB.

Stock temps under maximum load hit the thermal throttle by a very small margin, but after an upgrade to PTM7950 it didn’t throttle once. The thermal paste was a bit like cake frosting, so it’s definitely advisable to replace it, especially with PTM7950. If you run on the stock paste, maybe leave the UEFI/BIOS power setting on Balanced to keep temps in check.

My system came with an Adata Legend 900 2TB NVMe SSD, which performs pretty decently, so I recommend leaving it as the boot drive. I added a 4TB Samsung 990 Pro as my working drive.

Jeff G. –

If you know what this is for… it works as advertised. Linux and LM Studio ftw. Wish it had OCuLink, hence the 4 stars. Otherwise… perfect. Runs Windows well too, but… no one really wants Win11, so…

Eric D. Macarthur –

Bought this as a laptop replacement, along with a USB-C portable monitor, for CG content creation, LLMs, and gaming.

Stripped down the OS and couldn’t be happier. It handles everything well. Current AAA games are no problem.

Well ventilated, with ports on both sides.

Bought a DJ travel case to house our two setups for travel.

The BIOS is a bit simplistic, but fan control software is not hard to get.

Truly surprised by how well this APU works. I don’t plan to upgrade my workstation for a while, as this is almost as powerful as my current one, which is just a few months old.